How I Built an Automated Newsletter Using 6 Sources & n8n

Aug 05, 2025

I used to have a team member spend their entire Monday morning doing one thing: reading the internet.

They’d check The Verge, TechCrunch, and a half-dozen other sites, hunting for the latest AI news. They would copy-paste links and summaries into a shared document, trying to build a weekly intelligence report for the rest of the team.

The problem? By the time the report was finished, it was already 24 hours out of date. It was a manual, soul-crushing process that burned over 10 hours of valuable time every single week, just to produce something that was obsolete on arrival.

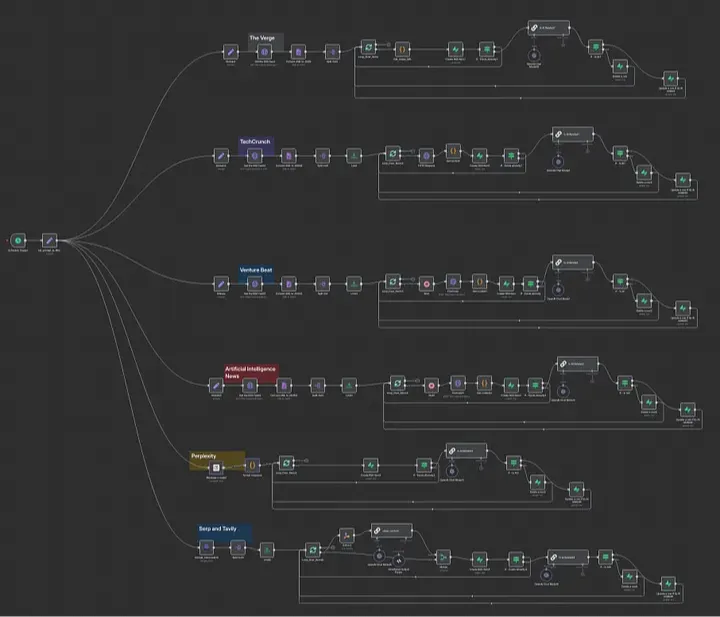

Building My Automated AI Newsletter: The Six-Source Engine

When I set out to build my automated AI newsletter, it was about more than just staying informed. I wanted a system that would not only keep me ahead of the AI curve, but also serve as a marketing channel — bringing in new leads, building authority, and running itself in the background. But as I quickly learned, every news source had its own quirks and challenges. Here’s how I tackled each one.

1. The Verge: Wrestling with RSS and Images

The Verge seemed like an easy win. Just grab the RSS feed, filter for “AI,” and you’re done, right?

Not quite. The XML was bloated, and image URLs were either missing or buried deep in the feed. I had to write custom code blocks in n8n to reliably extract images and clean up the metadata. The real “aha!” moment was implementing a global system prompt, so I could filter for true AI relevance in one place instead of tweaking every node.
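To make the global-prompt idea concrete, here is a minimal Python sketch (not the actual n8n setup from this workflow): one prompt constant that every source's relevance check reuses, plus a parser that treats anything other than a clear JSON `true` as irrelevant. The prompt wording, the JSON reply format, and the names `GLOBAL_RELEVANCE_PROMPT`, `relevance_messages`, and `is_relevant` are all hypothetical.

```python
import json

# One shared prompt (hypothetical wording): edit it here and every source's
# relevance check changes with it.
GLOBAL_RELEVANCE_PROMPT = (
    "You screen articles for an AI newsletter. An article is relevant only if "
    "AI, machine learning, or AI companies are its main subject. "
    'Reply with JSON: {"relevant": true or false, "reason": "..."}'
)


def relevance_messages(article):
    """Build chat messages for an article from any source, using the same system prompt."""
    return [
        {"role": "system", "content": GLOBAL_RELEVANCE_PROMPT},
        {"role": "user", "content": f"Title: {article['title']}\n\n{article.get('summary', '')}"},
    ]


def is_relevant(reply_text):
    """Fail closed: anything that is not a clear JSON true counts as irrelevant."""
    try:
        return json.loads(reply_text).get("relevant") is True
    except (json.JSONDecodeError, AttributeError):
        return False
```

Failing closed means a malformed model reply drops an article rather than letting noise into the newsletter.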

Finally, my feed was clean, focused, and ready for newsletter blocks.
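The image-extraction problem can be sketched in plain Python. This is an illustration, not the code from the workflow: it accepts RSS 2.0 `<item>`s or Atom `<entry>`s and looks for an image in a Media RSS tag, then an image `<enclosure>`, then the first `<img>` inside the item's HTML. The namespaces, the keyword list, and the function names are assumptions to check against the feed you actually pull.

```python
import re
import xml.etree.ElementTree as ET

# Namespaces commonly used by news feeds (assumed; check the real feed).
NS = {"atom": "http://www.w3.org/2005/Atom", "media": "http://search.yahoo.com/mrss/"}
IMG_SRC = re.compile(r"""<img[^>]+src=["']([^"']+)["']""", re.IGNORECASE)
# Hypothetical keyword pre-filter; the LLM relevance check makes the real call.
AI_TERMS = re.compile(
    r"\b(AI|artificial intelligence|machine learning|LLM|OpenAI|Anthropic)\b", re.IGNORECASE
)


def first_image(item, html):
    """Return the first image URL from media tags, an image enclosure, or inline HTML."""
    for tag in ("media:content", "media:thumbnail"):
        el = item.find(tag, NS)
        if el is not None and el.get("url"):  # compare to None: childless Elements are falsy
            return el.get("url")
    enclosure = item.find("enclosure")
    if enclosure is not None and enclosure.get("type", "").startswith("image/"):
        return enclosure.get("url")
    match = IMG_SRC.search(html or "")
    return match.group(1) if match else None


def parse_feed(xml_text):
    """Flatten RSS <item>s and Atom <entry>s into dicts, keeping only AI-looking ones."""
    root = ET.fromstring(xml_text)
    articles = []
    for item in root.iter("item"):  # RSS 2.0
        html = item.findtext("description", default="")
        articles.append({
            "title": item.findtext("title", default="").strip(),
            "url": item.findtext("link", default="").strip(),
            "image": first_image(item, html),
            "html": html,
        })
    for entry in root.iter(f"{{{NS['atom']}}}entry"):  # Atom
        link = entry.find("atom:link", NS)
        html = (entry.findtext("atom:content", default="", namespaces=NS)
                or entry.findtext("atom:summary", default="", namespaces=NS))
        articles.append({
            "title": entry.findtext("atom:title", default="", namespaces=NS).strip(),
            "url": link.get("href", "") if link is not None else "",
            "image": first_image(entry, html),
            "html": html,
        })
    return [a for a in articles if AI_TERMS.search(a["title"] + " " + a["html"])]
```

The keyword pass is deliberately loose; it only trims obvious misses before the more expensive LLM relevance check.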

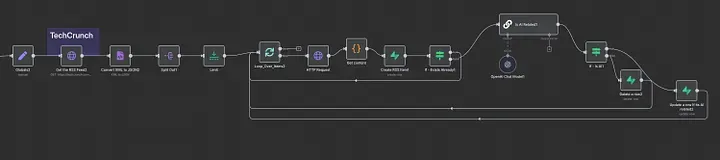

2. TechCrunch: From Snippets to Full Stories

TechCrunch’s RSS feed only gave me snippets — never the full story.

That’s not enough for a newsletter that’s supposed to deliver real value. My solution? A two-step process: first, pull the RSS, then trigger a second HTTP request for each article link to scrape the full content. Of course, the HTML was inconsistent, so I built a custom parser to extract titles, summaries, and tags.

It took some trial and error, but seeing those complete articles flow into Supabase was a huge win.
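The two-step pattern looks roughly like this as a standalone Python sketch. It assumes article pages expose a `<title>`, a `description` or `og:description` meta tag, and `article:tag` meta tags, which are common conventions but not confirmed for TechCrunch; `ArticleParser`, `fetch_html`, and `enrich` are hypothetical names. The fetch function is injectable so the parser can be tested without network access.

```python
import urllib.request
from html.parser import HTMLParser


class ArticleParser(HTMLParser):
    """Collects the <title>, a meta description, article:tag values, and <p> text."""

    def __init__(self):
        super().__init__()
        self.title, self.summary = "", ""
        self.tags, self.paragraphs = [], []
        self._in_title = self._in_p = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        key = attrs.get("property") or attrs.get("name")
        if tag == "title":
            self._in_title = True
        elif tag == "p":
            self._in_p = True
            self.paragraphs.append("")
        elif tag == "meta" and key in ("description", "og:description") and not self.summary:
            self.summary = attrs.get("content") or ""
        elif tag == "meta" and key == "article:tag":
            self.tags.append(attrs.get("content") or "")

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False
        elif tag == "p":
            self._in_p = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data
        elif self._in_p:
            self.paragraphs[-1] += data  # text inside nested <a>, <em>, etc. is kept


def fetch_html(url, timeout=15):
    """Step two's HTTP request: download one article page."""
    req = urllib.request.Request(url, headers={"User-Agent": "newsletter-bot/0.1"})
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        charset = resp.headers.get_content_charset() or "utf-8"
        return resp.read().decode(charset, errors="replace")


def parse_article(page):
    parser = ArticleParser()
    parser.feed(page)
    parser.close()
    body = "\n\n".join(p.strip() for p in parser.paragraphs if p.strip())
    return {"title": parser.title.strip(), "summary": parser.summary.strip(),
            "tags": parser.tags, "body": body}


def enrich(feed_items, fetch=fetch_html):
    """Fetch each RSS link and merge non-empty parsed fields over the RSS snippet."""
    out = []
    for item in feed_items:
        try:
            page = parse_article(fetch(item["url"]))
        except Exception as exc:  # keep the snippet if the page cannot be fetched or parsed
            page = {"error": str(exc)}
        out.append({**item, **{k: v for k, v in page.items() if v}})
    return out
```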

3. VentureBeat: Adapting to a Moving Target

VentureBeat’s RSS feed was incomplete, and their HTML structure seemed to change every other week.

I found myself constantly tweaking my extraction logic, adapting to new layouts and missing fields. But with every iteration, the workflow got smarter. Eventually, I had a reliable pipeline that could handle whatever VentureBeat threw at me, ensuring I never missed a key AI business update.
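One way to cope with a shifting layout is an ordered chain of fallbacks per field: if a redesign breaks the first pattern, the next one still gets a chance, and recording which pattern matched gives an early warning that something changed. The regexes below are illustrative assumptions, not VentureBeat's actual markup, and regex scraping is a quick choice that a proper HTML parser could replace.

```python
import html
import re


def _meta(prop):
    """Pattern for <meta property="prop" content="..."> (property before content assumed)."""
    return re.compile(
        r"<meta[^>]+property=[\"']" + re.escape(prop)
        + r"[\"'][^>]*content=(?P<q>[\"'])(?P<v>.*?)(?P=q)",
        re.IGNORECASE,
    )


# Ordered fallbacks per field (hypothetical patterns).
FALLBACKS = {
    "title": [
        _meta("og:title"),
        re.compile(r"<h1[^>]*>(?P<v>.*?)</h1>", re.IGNORECASE | re.DOTALL),
        re.compile(r"<title[^>]*>(?P<v>.*?)</title>", re.IGNORECASE | re.DOTALL),
    ],
    "published": [
        _meta("article:published_time"),
        re.compile(r"<time[^>]+datetime=[\"'](?P<v>[^\"']+)", re.IGNORECASE),
    ],
}
TAG = re.compile(r"<[^>]+>")


def extract(page, fallbacks=FALLBACKS):
    """Try each field's patterns in order; report which index matched (None if all failed)."""
    record, used = {}, {}
    for field, patterns in fallbacks.items():
        record[field], used[field] = None, None
        for index, pattern in enumerate(patterns):
            match = pattern.search(page)
            value = html.unescape(TAG.sub("", match.group("v"))).strip() if match else ""
            if value:
                record[field], used[field] = value, index
                break
    return record, used
```

Logging `used` per run makes layout changes visible: if the title suddenly stops matching at index `0` across a whole batch, the primary pattern has probably broken.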

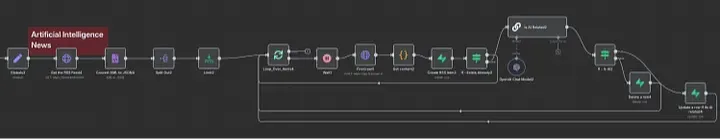

4. Artificial Intelligence News: The “Easy” Source That Still Needed Work

Artificial Intelligence News looked like a break — focused content, mostly consistent structure. But even here, field names would shift and the occasional malformed entry would slip through. I built a lightweight, adaptable parser that could handle minor changes without breaking. It was a reminder: even the “easy” sources need attention if you want true automation.
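A small alias table is one way to build that kind of tolerant parser: each canonical field lists the names it has appeared under, and entries missing a required field are set aside instead of crashing the run. The alias names below are hypothetical examples, not the feed's real schema.

```python
# Hypothetical alias table: canonical field -> names seen in the feed over time.
ALIASES = {
    "title": ["title", "headline", "name"],
    "url": ["url", "link", "permalink"],
    "published": ["published", "pubDate", "date", "published_at"],
    "summary": ["summary", "description", "excerpt"],
}
REQUIRED = ("title", "url")


def normalize(entry):
    """Map whichever field names an entry uses onto the canonical keys."""
    return {
        canonical: next((entry[n] for n in names if entry.get(n)), None)
        for canonical, names in ALIASES.items()
    }


def normalize_all(entries):
    """Return (clean, rejected) so malformed entries are logged rather than fatal."""
    clean, rejected = [], []
    for entry in entries:
        if not isinstance(entry, dict):
            rejected.append(entry)
            continue
        record = normalize(entry)
        if all(record[k] for k in REQUIRED):
            clean.append(record)
        else:
            rejected.append(entry)
    return clean, rejected
```

When the feed renames a field, the fix is one new alias rather than a change to the parsing logic.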

5. Perplexity: My AI-Powered Research Assistant

Perplexity was a turning point.

Instead of waiting for news, I could actively search for the latest AI developments. The challenge was making sure my queries were always up to date — so I built in dynamic date handling and strict filters to exclude irrelevant topics.

The result? A feed that gave me the freshest, most relevant stories every day.
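Dynamic date handling mostly means rebuilding the query on every run. Here is a minimal Python sketch, assuming Perplexity's OpenAI-compatible chat completions endpoint; the model name `sonar`, the `search_recency_filter` field, and the exclusion list are assumptions to verify against Perplexity's current API reference.

```python
import json
import urllib.request
from datetime import date, timedelta

# Hypothetical exclusion list; tune it to whatever keeps showing up as noise.
EXCLUDED_TOPICS = ["cryptocurrency", "stock picks", "celebrity gossip"]


def build_query(today=None, days_back=1):
    """Recompute the date window on every run so the query never goes stale."""
    today = today or date.today()
    since = today - timedelta(days=days_back)
    return (
        "What are the most important AI developments published between "
        f"{since.isoformat()} and {today.isoformat()}? "
        "Only include items from that window, each with its source URL. "
        f"Ignore anything about: {', '.join(EXCLUDED_TOPICS)}."
    )


def build_payload(today=None):
    # "sonar" and search_recency_filter are assumptions; check the API docs.
    return {
        "model": "sonar",
        "messages": [
            {"role": "system", "content": "You are a research assistant for an AI newsletter. Cite sources."},
            {"role": "user", "content": build_query(today)},
        ],
        "search_recency_filter": "day",
    }


def ask_perplexity(payload, api_key):
    """POST the payload to Perplexity's chat completions endpoint and return the JSON reply."""
    request = urllib.request.Request(
        "https://api.perplexity.ai/chat/completions",
        data=json.dumps(payload).encode(),
        headers={"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request, timeout=60) as response:
        return json.load(response)
```

ISO dates keep the prompt unambiguous and independent of the server's locale.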

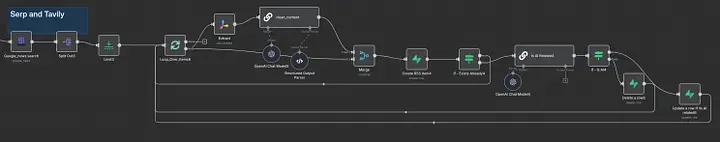

6. SerpAPI & Tavily: Data Cleaning Chaos

Google News is a goldmine, but snippets aren’t enough — and every site structures content differently. I used SerpAPI to run targeted searches, then piped each result through Tavily to extract the full article, no matter the source.
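The SerpAPI-to-Tavily hand-off can be sketched as small steps: build the Google News search URL, collect unique article links (including clustered stories), and send them to Tavily's extract endpoint. The response shapes (`news_results`, `stories`, `results`, `raw_content`) and the Bearer-token header reflect my understanding of the two APIs and should be checked against their docs; the cap of 20 links is arbitrary.

```python
import json
import urllib.parse
import urllib.request

SERPAPI_URL = "https://serpapi.com/search.json"
TAVILY_EXTRACT_URL = "https://api.tavily.com/extract"


def serp_url(query, api_key):
    """Google News search through SerpAPI."""
    params = {"engine": "google_news", "q": query, "api_key": api_key}
    return f"{SERPAPI_URL}?{urllib.parse.urlencode(params)}"


def collect_links(serp_json, limit=20):
    """Pull unique article links from the results, including clustered stories."""
    seen, links = set(), []
    for result in serp_json.get("news_results", []):
        for story in [result, *result.get("stories", [])]:
            link = story.get("link")
            if link and link not in seen:
                seen.add(link)
                links.append(link)
    return links[:limit]


def tavily_request(links, api_key):
    """Build (but do not send) the extract request for a batch of URLs."""
    return urllib.request.Request(
        TAVILY_EXTRACT_URL,
        data=json.dumps({"urls": links}).encode(),
        method="POST",
        headers={"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"},
    )


def full_texts(tavily_json):
    """Map URL -> extracted text; URLs that failed extraction are simply absent."""
    return {r["url"]: r.get("raw_content", "") for r in tavily_json.get("results", [])}
```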

For the really messy sites, I brought in GPT-4.1 mini to clean and structure the content, since every site had its own spam and ads to strip out. This was the most complex workflow by far, but it gave me a comprehensive, cross-platform view of the AI landscape.
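The cleanup step boils down to one chat-completion request per article. This sketch only builds the request body and validates the reply; the prompt wording, the character cap, and the `title`/`summary`/`body` output schema are hypothetical, and `response_format` JSON mode is an OpenAI Chat Completions option worth confirming for the model you use.

```python
import json

MAX_CHARS = 12_000  # arbitrary cap to keep token usage predictable
REQUIRED_KEYS = ("title", "summary", "body")
CLEAN_PROMPT = (  # hypothetical wording; JSON mode expects the word "JSON" in the prompt
    "You clean scraped news articles. Remove ads, cookie banners, newsletter "
    "sign-up text, and navigation. Return JSON with keys title, summary, body."
)


def cleanup_payload(url, raw_text, model="gpt-4.1-mini"):
    """Build a Chat Completions request body for one scraped article."""
    return {
        "model": model,
        "response_format": {"type": "json_object"},
        "messages": [
            {"role": "system", "content": CLEAN_PROMPT},
            {"role": "user", "content": f"URL: {url}\n\n{raw_text[:MAX_CHARS]}"},
        ],
    }


def parse_cleaned(response_json):
    """Return the cleaned article dict, or None if the reply is not usable JSON."""
    content = response_json["choices"][0]["message"]["content"]
    try:
        data = json.loads(content)
    except json.JSONDecodeError:
        return None
    if not isinstance(data, dict):
        return None
    return data if all(data.get(k) for k in REQUIRED_KEYS) else None
```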

Bringing It All Together

All these sources and workflows feed into a single Supabase database, filtered by my AI agent for true relevance. What started as a chaotic mess of feeds and formats is now a streamlined, automated system that not only keeps me informed but also brings fresh leads into my business every single day, automatically.
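Writing everything into one table works best when duplicates collapse onto a single key, since the same story often arrives both from RSS and from SerpAPI. A minimal sketch using Supabase's PostgREST endpoint, assuming a hypothetical `articles` table with a unique constraint on `url`; stripping `utm_` parameters is one possible canonicalization rule, not necessarily the one used here.

```python
import json
import urllib.parse
import urllib.request


def canonical_url(url):
    """Lower-case scheme/host, drop utm_* params, fragments, and trailing slashes."""
    parts = urllib.parse.urlsplit(url.strip())
    query = urllib.parse.urlencode(
        [(k, v) for k, v in urllib.parse.parse_qsl(parts.query) if not k.startswith("utm_")]
    )
    path = parts.path.rstrip("/") or "/"
    return urllib.parse.urlunsplit((parts.scheme.lower(), parts.netloc.lower(), path, query, ""))


def dedupe(records):
    """Keep the first record seen for each canonical URL."""
    by_url = {}
    for record in records:
        key = canonical_url(record["url"])
        by_url.setdefault(key, {**record, "url": key})
    return list(by_url.values())


def upsert_request(records, supabase_url, api_key, table="articles"):
    """Build (but do not send) a PostgREST bulk upsert keyed on the url column."""
    endpoint = f"{supabase_url.rstrip('/')}/rest/v1/{table}?on_conflict=url"
    return urllib.request.Request(
        endpoint,
        data=json.dumps(dedupe(records)).encode(),
        method="POST",
        headers={
            "apikey": api_key,
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
            "Prefer": "resolution=merge-duplicates",
        },
    )
```

Deduplicating inside the batch matters because Postgres rejects an upsert that would update the same row twice in one statement. Sending the request is then a single `urllib.request.urlopen(req)` call.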

If you want access to this full workflow, you can join my newsletter. Soon, you’ll get the complete n8n template as part of our growing library of automations. No matter where you are on the Five-Level Automation Framework, you’ll find something here to help you scale your impact and save hours every week.

Get Access to the Automation Library now!
