How to Turn a Single Photo Into a Talking Avatar (Automated Workflow)

Jan 21, 2026

I recently posted a demo on LinkedIn featuring digital avatars mimicking human motion. The response was massive: over 24,000 impressions and hundreds of comments.

Why? Because companies are desperate for content, but traditional video production is slow and expensive. It requires cameras, lights, and actors. We are currently stuck at Level 1: doing everything manually.

Today, I’m walking you through a workflow that pushes you to Level 2 and Level 3. We are going to use AI to “puppet” any image using a reference video. The goal isn’t just to make cool videos; it’s to build a system that creates content while you sleep.

The Good, The Bad, and The Swollen Arms

I tested this workflow using the Kling 2.6 model. I used a few celebrity images for educational purposes to see how the model handled familiar faces.

The Good:

- Motion Accuracy: The AI tracks movement incredibly well.

- Detail: It handles things AI usually hates, like teeth and complex facial angles.

- Physics: The hair movement is consistent and looks real.

The Bad:

- Anatomy Glitches: In one test, Henry Cavill ended up with swollen arms that looked painful.

- Likeness Drift: Sometimes the face loses its identity slightly during rapid movement.

Even with the glitches, this is faster than hiring an animation team. We are taking a static image and a reference video, and merging them.

Building the Puppeteer Engine

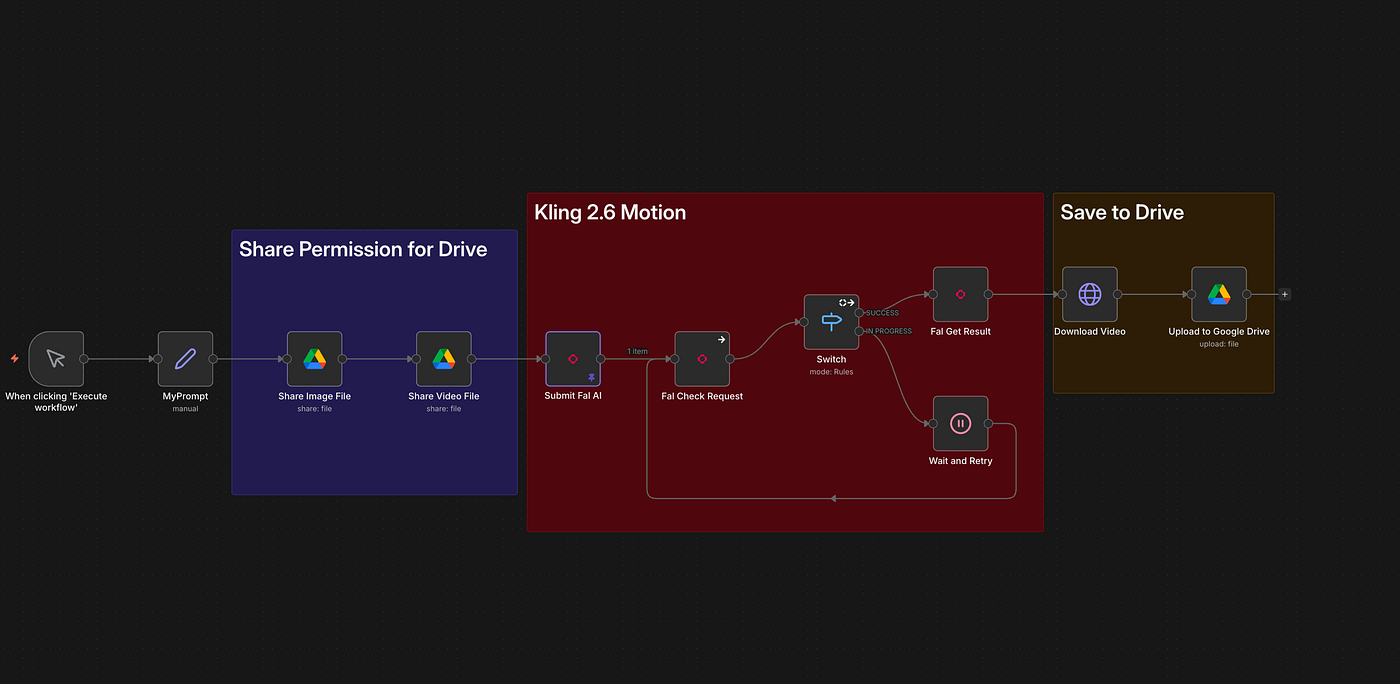

We don’t do this manually; we automate it using n8n. Think of n8n as a digital assembly line. We put raw materials in one end, and a finished product comes out the other.

The Workflow Logic:

- The Trigger: You start the process (form, chat, or schedule).

- The Inputs: You provide the Source Image (the face) and the Driver Video (the motion).

- The Processor: We send these assets to Fal AI running the Kling 2.6 model.

Handling the Technical Handshake

A specific hurdle is Google Drive permissions. Your AI tools need to “see” your files. In n8n, we automate the sharing process by taking the file ID, changing the permissions to “anyone with the link,” and generating a direct URL. If you skip this, the automation fails.
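For readers who want to see what that sharing step amounts to outside n8n, here is a minimal Python sketch. It only builds the request description and the link; it does not send anything. The permissions endpoint and the `role`/`type` body follow the Google Drive API v3. The `uc?export=download` link is a commonly used direct-download pattern, included here as an assumption, and it may not behave the same for very large files. The function names are illustrative and not part of the n8n workflow.

```python
from urllib.parse import urlencode

DRIVE_API = "https://www.googleapis.com/drive/v3/files"


def build_share_request(file_id: str) -> dict:
    """Describe the Drive API v3 call that grants read access to anyone with the link."""
    return {
        "method": "POST",
        "url": f"{DRIVE_API}/{file_id}/permissions",
        # "anyone" + "reader" corresponds to "anyone with the link can view"
        "json": {"role": "reader", "type": "anyone"},
    }


def direct_download_url(file_id: str) -> str:
    """Build a direct link from a file ID (assumed URL pattern, see note above)."""
    query = urlencode({"export": "download", "id": file_id})
    return f"https://drive.google.com/uc?{query}"


if __name__ == "__main__":
    file_id = "YOUR_FILE_ID"  # placeholder, not a real file
    print(build_share_request(file_id))
    print(direct_download_url(file_id))
```

The share request still needs an OAuth token to be sent; in n8n the Google Drive credential handles that part for you.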

Once the files are accessible, we send a request to Fal AI via a Community Node. You will need your Fal AI API key, and you must select the kling-video-v2.6-standard (or Pro) model.
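If you are curious what the Community Node is roughly doing under the hood, the sketch below builds (but does not send) a queued request to Fal AI using only the Python standard library. Treat the details as assumptions: the `queue.fal.run` base URL and the `Key` authorization scheme reflect Fal's queue API as I understand it, while the payload field names `image_url` and `video_url` are hypothetical placeholders. Check the model's page on Fal for the exact route and input schema before using it.

```python
import json
import urllib.request

FAL_QUEUE_BASE = "https://queue.fal.run"  # assumed queue endpoint


def build_fal_request(api_key: str, model_path: str,
                      image_url: str, video_url: str) -> urllib.request.Request:
    """Build a POST request that submits a source image and a driver video."""
    # Hypothetical field names; the real model schema may differ.
    payload = {"image_url": image_url, "video_url": video_url}
    return urllib.request.Request(
        url=f"{FAL_QUEUE_BASE}/{model_path}",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Key {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    req = build_fal_request(
        api_key="YOUR_FAL_KEY",             # placeholder
        model_path="YOUR/MODEL/PATH",       # the Kling 2.6 route from Fal's docs
        image_url="https://example.com/face.png",
        video_url="https://example.com/motion.mp4",
    )
    print(req.get_method(), req.full_url)
```

Sending it would be a call to `urllib.request.urlopen(req)`; that call is left out here so the sketch runs without a key.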

The Waiting Room

Video generation takes time (e.g., 7 minutes for a 5-minute video). If you request the result immediately, the system will error out because the video doesn't exist yet, so we build a polling loop:

- Send Request: “Hey Fal AI, make this video.”

- Wait: The system pauses for 30 seconds.

- Check Status: “Is it done yet?”

- Repeat: If “in progress,” we wait again.

- Completion: Once “Completed,” the system downloads and saves it to Google Drive.
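The loop above can be sketched in a few lines of Python. This is an illustration of the wait-and-check pattern, not the n8n workflow itself. The `check_status` callable is a hypothetical stand-in for the "Is it done yet?" request, the status strings are assumptions based on the steps above, and the `max_checks` cap is my addition so the loop cannot run forever.

```python
import time
from typing import Callable


def wait_for_video(check_status: Callable[[], str],
                   wait_seconds: float = 30,
                   max_checks: int = 40,
                   sleep: Callable[[float], None] = time.sleep) -> int:
    """Pause, check the job status, and repeat until it reports completion.

    Returns the number of status checks it took.
    """
    for attempt in range(1, max_checks + 1):
        sleep(wait_seconds)  # Wait: pause before asking
        # Normalize so "IN_PROGRESS", "in progress", and "In Progress" all match
        status = check_status().strip().lower().replace("_", " ")
        if status == "completed":
            return attempt  # Completion: caller can now download the video
        if status not in ("in progress", "in queue"):
            raise RuntimeError(f"unexpected status: {status!r}")
    raise TimeoutError(f"video not ready after {max_checks} checks")


if __name__ == "__main__":
    # Simulated job that finishes on the third check; no real waiting.
    fake_statuses = iter(["in queue", "in progress", "Completed"])
    checks = wait_for_video(lambda: next(fake_statuses), sleep=lambda s: None)
    print(f"done after {checks} checks")
```

Passing `sleep` in as a parameter keeps the function easy to try without actually waiting 30 seconds per round.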

Why This Matters for Your Business

This is about scalability. Imagine a CEO avatar: you record one video of an actor speaking, and you can now drive that avatar with new audio and motion without the CEO ever entering a studio. That is Level 3 automation.

Next Steps

We will eventually integrate ElevenLabs to transform voices into different accents or genders to match the avatar perfectly.

If you are not tech-savvy, I have provided the full detailed instructions, n8n code, and steps in our Corporate Automation Library (CAL).

Click Here to gain access to CAL.

We have 60+ high-impact, high-ROI automations, including:

- Automatic LinkedIn post generators.

- Cloning yourself as a presenter.

- Newsletter automation.

- Lead scraping (50,000+ leads).

Stop building from scratch. Start automating.

Ritesh Kanjee

Automations Architect & Founder

Augmented AI (121K Subscribers | 58K LinkedIn Followers)
