Nanoagents vs. Generalist Agents: Which is better?

Nov 24, 2025

Two architectures. One complex migration workflow. We ran the experiment to see which approach actually survives the real world.

There is a debate quietly raging in corporate AI strategy. On one side, you have the believers in raw power: one massive, omniscient model that handles everything. On the other, you have the proponents of structure: a swarm of tiny, specialized agents working in concert.

We didn’t just want to theorize about which was better. We wanted to prove it.

So, when a client came to us with a broken document workflow, we decided to run a live experiment. We built both systems. We fed them the same complex tasks. We measured every hallucination, every dollar, and every second.

The answer to “which one is better?” ended up being far more decisive than we expected.

The Arena: A Migration Nightmare

The client was an Australian migration company dealing with high-stakes visa applications. Their reality was chaos. They were relying on a single shared ChatGPT account where staff would copy and paste text in and out of a chat window. They stored long, cumbersome prompts in Notion, dug up old chats, and manually searched for changing regulations.

It was slow, fragile, and untrusted. This was Level 1 automation: manual, ad-hoc, and unscalable.

We proposed building a custom document processor to handle their files — title pages, legal arguments, evidence linking, and summaries. But how should we build the engine?

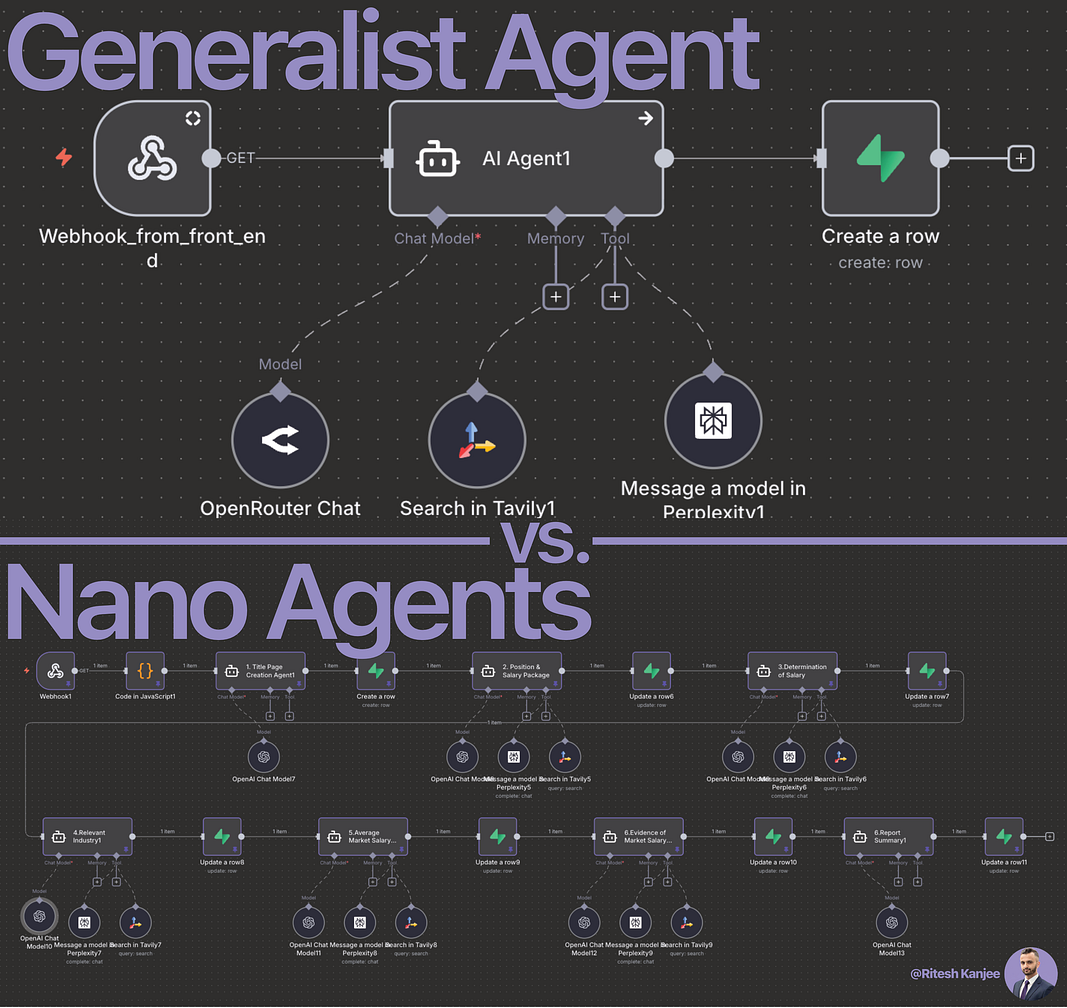

We had two architectural choices:

- The Heavyweight (The Generalist): One massive system prompt. One powerful model. All tools attached. A single “brain” attempting to do it all.

- The Swarm (The Nanoagents): A team of small, hyper-focused agents. Each owns one step. Each has limited tools. Each stays in its lane.

We decided to build both and see which one survived.

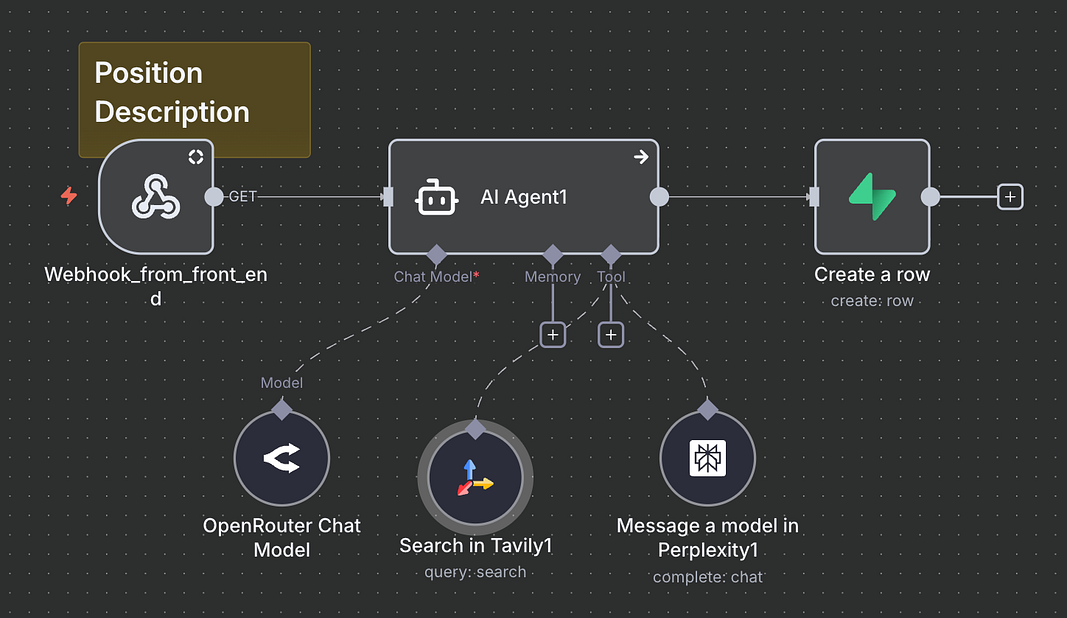

Contender 1: The Generalist

The Generalist approach is seductive because it feels simple. We set up one robust agent powered by GPT-5.1. We gave it a comprehensive system prompt explaining the entire migration document structure. We gave it access to the web, file reading tools, and formatting instructions.

The Promise: “You are an expert migration lawyer. Take this client file and generate the complete submission document.”

It sounds like magic. You talk to one thing, and it does the work.

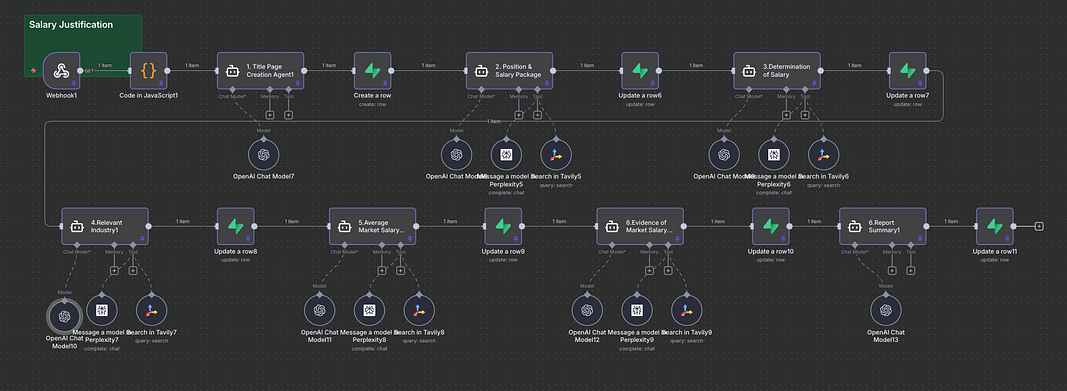

Contender 2: The Nanoagents

The Nanoagent approach feels complex at first glance. Instead of one brain, we broke the workflow into five distinct workers:

- Title Page Agent: A small model that only formats dates and names.

- Introduction Agent: A mid-tier model that only writes the opening narrative.

- Body / Evidence Agent: A powerhouse model (GPT-5.1) focused purely on legal reasoning.

- Web / Regulation Agent: A specialist that only scrapes and retrieves current laws.

- Summary Agent: A reviewer that checks the output for risks.

The Promise: Structure over raw intelligence. No single agent knows the whole picture, but every agent is an expert in its tiny slice of reality.
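As a rough illustration (this is not the n8n workflow itself), the five-worker split could be sketched in Python like this. The tier labels, tool names, and stub functions are all assumptions made for the example. The article only names GPT-5.1 for the body step, so the tiers shown for the regulation and summary steps are guesses.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Nanoagent:
    name: str                    # the one step this agent owns
    model: str                   # placeholder tier label, not a real model ID
    tools: frozenset             # hypothetical names of the only tools it may use
    run: Callable[[dict], str]   # stand-in for the real model call


# Stub workers: each reads the shared document and returns only its own section.
def title_page(doc: dict) -> str:
    return f"{doc['client']} (subclass {doc['subclass']})"


def introduction(doc: dict) -> str:
    return f"Introduction for {doc['client']}"


def body(doc: dict) -> str:
    return f"Legal argument for subclass {doc['subclass']}"


def regulations(doc: dict) -> str:
    return f"Current rules for subclass {doc['subclass']}"


def summary(doc: dict) -> str:
    return f"Risk review of: {doc['body']} / {doc['regulations']}"


PIPELINE = [
    Nanoagent("title_page", "small", frozenset(), title_page),
    Nanoagent("introduction", "mid", frozenset({"read_file"}), introduction),
    Nanoagent("body", "large", frozenset({"read_file"}), body),
    Nanoagent("regulations", "mid", frozenset({"web_search"}), regulations),
    Nanoagent("summary", "mid", frozenset(), summary),
]


def run_pipeline(case: dict) -> dict:
    """Pass one shared document through every agent, in order."""
    doc = dict(case)
    for agent in PIPELINE:
        doc[agent.name] = agent.run(doc)
    return doc


# "000" is a dummy subclass used only for the demo.
result = run_pipeline({"client": "Jane Doe", "subclass": "000"})
print(result["title_page"])
```

Each worker writes exactly one key into the shared document, which is what keeps the hand-offs between steps easy to inspect.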

The Experiment

We ran over 100 hours of tests. Different client profiles. Different visa subclasses. Different levels of complexity.

Round 1: Setup and Speed

The Generalist was faster to build. You write one prompt, you plug it in, and you go. The Nanoagents took time; we had to orchestrate them in n8n, ensuring data passed cleanly from the Title Agent to the Intro Agent, and so on.

Early advantage: The Generalist.

Round 2: Accuracy and Drift

Then we started the heavy runs.

The Generalist began to struggle. With a system prompt spanning pages of instructions, it started to hallucinate. It would confuse formatting rules with legal rules. It would invent a regulation because it was trying to be “creative” with the introduction. It was juggling too many balls.

The Nanoagents, however, were boringly consistent. The Title Agent never tried to give legal advice; it didn’t know how. The Regulation Agent never messed up the formatting; it didn’t have formatting tools. Because the prompts were short and specific, “drift” was almost non-existent.
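One way to picture that kind of tool isolation is a per-agent allowlist that is checked before any tool runs. The sketch below is an assumption about how such a guard might look, not the actual n8n configuration. The agent names, tool names, and the `call_tool` helper are hypothetical.

```python
class ToolNotAllowed(Exception):
    """Raised when an agent asks for a tool outside its allowlist."""


def web_search(query: str) -> str:
    # Stand-in for a real search tool.
    return f"results for {query}"


def format_date(raw: str) -> str:
    # Stand-in for a real formatting tool.
    return raw.strip()


TOOLS = {"web_search": web_search, "format_date": format_date}

# Hypothetical allowlists: each agent sees only the tools its step needs.
ALLOWED = {
    "title_page": {"format_date"},
    "regulations": {"web_search"},
}


def call_tool(agent: str, tool: str, arg: str) -> str:
    """Run a tool only if it is on the calling agent's allowlist."""
    if tool not in ALLOWED.get(agent, set()):
        raise ToolNotAllowed(f"{agent} may not call {tool}")
    return TOOLS[tool](arg)


print(call_tool("title_page", "format_date", " 24 Nov 2025 "))

try:
    call_tool("title_page", "web_search", "visa rules")
except ToolNotAllowed as exc:
    print("blocked:", exc)
```

The guard does not rely on the prompt telling the agent to behave. The Title Agent cannot search the web because the call is refused before it runs.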

Round 3: The Debugging Nightmare

When the Generalist failed, it was a black box. Did it fail because of the web search? Did it lose context? Did it prioritize the wrong instruction? Fixing it meant tweaking a massive prompt and hoping you didn’t break something else.

When a Nanoagent failed — say, the Introduction was weak — we knew exactly where to look. We didn’t touch the legal logic; we just tweaked the Intro Agent.
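A minimal sketch of why this is easier to debug: if every step records its own trace entry, the failing step names itself. The step functions and the `run_with_trace` helper below are hypothetical stand-ins, and the raised error simply simulates an introduction that fails review.

```python
import time


def run_with_trace(steps, case):
    """Run steps in order, recording one trace entry per step."""
    doc = dict(case)
    trace = []
    for name, step in steps:
        started = time.perf_counter()
        entry = {"step": name, "status": "ok", "error": None}
        try:
            doc[name] = step(doc)
        except Exception as exc:
            entry["status"] = "failed"
            entry["error"] = repr(exc)
        entry["seconds"] = round(time.perf_counter() - started, 4)
        trace.append(entry)
        if entry["status"] == "failed":
            break  # stop so later steps never run on bad input
    return doc, trace


def weak_introduction(doc):
    # Simulated failure, standing in for an introduction that fails review.
    raise ValueError("introduction is too short")


steps = [
    ("title_page", lambda doc: f"Submission for {doc['client']}"),
    ("introduction", weak_introduction),
    ("body", lambda doc: "Legal argument"),
]

doc, trace = run_with_trace(steps, {"client": "Jane Doe"})
for entry in trace:
    print(entry["step"], entry["status"], entry["error"])
```

The trace ends at the introduction step, so that is the only prompt that needs attention. The same per-step records can double as an audit log.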

The Verdict

After the dust settled, we looked at the data.

The Generalist agent was a jack-of-all-trades and master of none. It was expensive to run (paying top-tier model prices for simple tasks) and prone to confusion.

The Nanoagents were surgical. By using small, cheap models for simple steps and expensive models only for complex reasoning, the cost per document dropped. By isolating tools, we removed the risk of an agent browsing the web when it shouldn’t.
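The cost argument comes down to simple arithmetic: tokens per step multiplied by the price of the model that handles that step. The sketch below uses invented prices and token counts purely to show the calculation. None of these numbers are measurements from the experiment, and the tier assignments are assumptions.

```python
# Invented placeholder prices (per 1,000 tokens). NOT real pricing.
PRICE_PER_1K_TOKENS = {"small": 0.001, "mid": 0.01, "large": 0.1}

# Invented placeholder token counts per step. NOT measured values.
STEP_TOKENS = {
    "title_page": 1000,
    "introduction": 2000,
    "body": 6000,
    "regulations": 3000,
    "summary": 2000,
}

# Assumed tier per step for the nanoagent setup.
NANO_TIERS = {
    "title_page": "small",
    "introduction": "mid",
    "body": "large",
    "regulations": "mid",
    "summary": "mid",
}

# The generalist sends every step through the most expensive tier.
GENERALIST_TIERS = {step: "large" for step in STEP_TOKENS}


def cost_per_document(tiers: dict) -> float:
    """Sum tokens / 1000 * price over all steps for a given tier assignment."""
    return sum(
        STEP_TOKENS[step] / 1000 * PRICE_PER_1K_TOKENS[tiers[step]]
        for step in STEP_TOKENS
    )


generalist_cost = cost_per_document(GENERALIST_TIERS)
nano_cost = cost_per_document(NANO_TIERS)
print(f"generalist: {generalist_cost:.3f}  nanoagents: {nano_cost:.3f}")
```

With any price list where the large tier costs more than the others, the mixed assignment can only come out cheaper or equal, because just the body step pays the top rate.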

The Winner: Nanoagents.

The results were not close. The Nanoagent architecture delivered:

- 50% time savings in processing.

- Lower costs by matching models to tasks.

- Audit-ready logs for every step of the process.

Why Structure Beats Intelligence

The curious part is why the “smarter” single agent lost.

It turns out that you do not have a document problem. You have a structure problem.

When you ask one agent to be a receptionist, lawyer, researcher, and writer simultaneously, you are setting it up to fail. When you break those roles down, even a “dumber” model can outperform a genius.

But you don’t have to run these experiments yourself.

Inside our Corporate Automation Library (CAL), we have more than 60 battle-tested workflows ranging from Sales and Marketing to Content Generation and Operations.

For every single use case, we have already fought this battle. We tested Generalists vs. Nanoagents and selected the winning architecture for that specific job. We did the R&D so you don’t have to.

Stop guessing. Start deploying.

Click here to access the Corporate Automation Library

Ritesh Kanjee | Automations Architect & Founder

Augmented AI (121K Subscribers | 59K LinkedIn Followers)
