What Are The Best Tools For Edge AI?

Mar 24, 2022

Cloud computing provisions computation power and memory on demand, creating a flexible, cost-efficient computing paradigm. It has come a long way since its inception in the late 1990s; according to recent reports, the global cloud computing market is valued at USD 368.97 billion. At the same time, Artificial Intelligence (AI), especially hardware-intensive Deep Learning, has developed massively, and much of that progress might never have been put to use without the explosion of cloud computing.

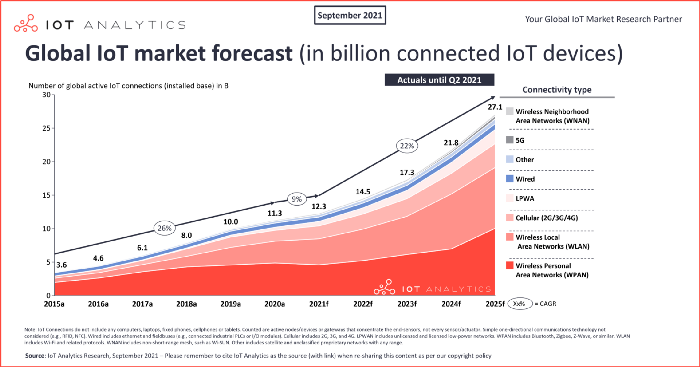

The relentless growth of IoT devices (Source: IoT Analytics)

Even so, cloud computing has its limitations. With the rapid growth of Internet of Things (IoT) devices, data transfer itself becomes a bottleneck: about 850 ZB of data is generated by IoT devices at the edge of the network, yet total traffic to data centers worldwide reaches only 20.6 ZB. Besides that, sending data back to data centers for AI inference adds network latency to every prediction. Data privacy is also a rising concern, since organizations and individuals are usually not keen on sharing (potentially sensitive) data with commercial cloud providers.

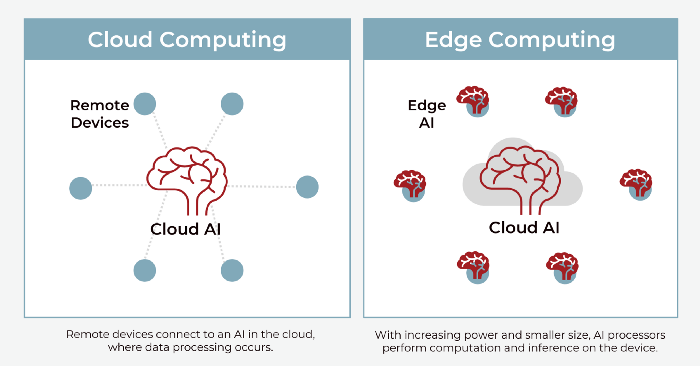

(Source: CardinalPeak)

The fast-evolving paradigm of edge computing addresses all of these concerns. Instead of relying solely on centralized data centers, it leverages the growing computational power and shrinking size of processors to perform computations closer to the edge, where the data is generated. That being said, edge AI is still in its infancy because IoT devices are resource-constrained and efficient algorithms are still lacking.

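In this article, we’re going to look at current solutions to these issues: the Jetson Nano, which brings more computing power to edge devices; TensorRT, a deep learning compiler that produces more efficient models for edge devices; and DeepStream, a platform for analyzing many video streams at once.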

Jetson Nano

Jetson Nano is the entry-level member of NVIDIA’s Jetson line of Single Board Computers (SBCs). Think Raspberry Pi, but with a CUDA-capable NVIDIA GPU. Although the Raspberry Pi 4 has a more powerful CPU (a quad-core ARM Cortex-A72, versus the Nano’s Cortex-A57), the Jetson’s Maxwell GPU, with 128 CUDA cores and a whopping 472 GFLOPS (FP16), dwarfs the Pi’s integrated Broadcom GPU. Besides the increase in computation power, having an NVIDIA GPU opens the door to a plethora of optimizations (like TensorRT).
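Where does the 472 GFLOPS figure come from? A quick back-of-the-envelope calculation lands right on it. This is a sketch under our own assumptions, not an official NVIDIA formula: a 921.6 MHz maximum GPU clock, one fused multiply-add (2 FLOPs) per CUDA core per cycle, and double-rate packed FP16 math.

```python
# Back-of-the-envelope peak throughput for the Jetson Nano's Maxwell GPU.
# Assumptions (not from the article): 921.6 MHz max GPU clock, one fused
# multiply-add (2 FLOPs) per CUDA core per cycle, 2x packed FP16 throughput.
CUDA_CORES = 128
GPU_CLOCK_HZ = 921.6e6
FLOPS_PER_FMA = 2
FP16_PACKING = 2


def peak_gflops(cores: int, clock_hz: float, fp16: bool) -> float:
    """Theoretical peak in GFLOPS: cores x clock x FLOPs per cycle."""
    flops = cores * clock_hz * FLOPS_PER_FMA
    if fp16:
        flops *= FP16_PACKING  # two half-precision operations per FP32 lane
    return flops / 1e9


fp32 = peak_gflops(CUDA_CORES, GPU_CLOCK_HZ, fp16=False)
fp16 = peak_gflops(CUDA_CORES, GPU_CLOCK_HZ, fp16=True)
print(f"FP32 peak: {fp32:.1f} GFLOPS")
print(f"FP16 peak: {fp16:.1f} GFLOPS")
```

The same arithmetic gives roughly half that figure for FP32, which is worth keeping in mind when comparing spec sheets: peak numbers are theoretical ceilings, not measured model throughput.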

(Source: AugmentedStartup’s Jetson Nano Course)

Take SSD MobileNetV2, for instance. It runs at an abysmal 1 FPS natively on the Raspberry Pi and 11 FPS with the Intel Movidius Neural Compute Stick accelerator, while the Jetson Nano runs it at a blazing 39 FPS. The trend is similar across the board for other computer vision models.
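Frame rates are often easier to reason about as per-frame time budgets. The small sketch below converts the SSD MobileNetV2 figures above into milliseconds per frame and speedups over the Pi’s CPU. It assumes frames are processed one at a time, so that latency is simply the inverse of throughput; pipelined systems can break that assumption.

```python
# SSD MobileNetV2 throughput figures quoted in the article (frames per second).
benchmarks_fps = {
    "Raspberry Pi (CPU)": 1.0,
    "Raspberry Pi + Movidius NCS": 11.0,
    "Jetson Nano": 39.0,
}

baseline = benchmarks_fps["Raspberry Pi (CPU)"]


def frame_time_ms(fps: float) -> float:
    """Time per frame in milliseconds, assuming one frame is processed at a time."""
    return 1000.0 / fps


for device, fps in benchmarks_fps.items():
    print(
        f"{device:30s} {fps:5.1f} FPS  "
        f"{frame_time_ms(fps):7.1f} ms/frame  "
        f"{fps / baseline:5.1f}x vs. Pi CPU"
    )
```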

As an SBC made for artificial intelligence workloads, Jetson Nano can run multiple neural networks in parallel and supports the most popular AI frameworks, including TensorFlow, PyTorch, Caffe, and MXNet.

TensorRT

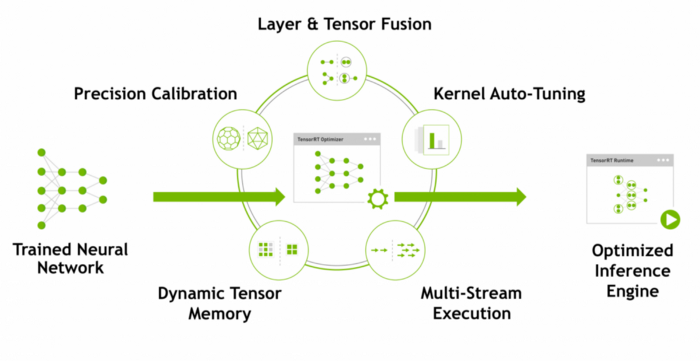

NVIDIA’s TensorRT SDK provides a deep learning inference optimizer and runtime that helps you create more efficient versions of trained models, delivering lower latency and higher throughput. According to NVIDIA, TensorRT-based applications can perform up to 40 times faster during inference than their CPU-only counterparts.

TensorRT supports INT8 and FP16 optimizations for production deployments of deep learning inference applications. Reduced-precision arithmetic moves less data and performs cheaper operations per value, which lowers inference time significantly; INT8 typically relies on a calibration step to keep the loss in accuracy small. This reduced inference time is essential for applications that need real-time predictions.
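To get a feel for what reduced precision does to the numbers themselves, here is a minimal sketch in plain Python. It rounds values to FP16 using the standard library’s half-precision `struct` format, and quantizes them to INT8 with a symmetric per-tensor scale taken from the maximum absolute value. That “max” rule is our simplification: TensorRT chooses its INT8 scales through its own calibration process, and the weight values here are made up for illustration.

```python
import struct


def to_fp16(x: float) -> float:
    """Round a Python float (FP64) to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def int8_scale(values: list[float]) -> float:
    """Symmetric per-tensor scale from the max absolute value (simplified calibration)."""
    max_abs = max(abs(v) for v in values)
    return max_abs / 127.0 if max_abs > 0 else 1.0


def quantize_int8(values: list[float], scale: float) -> list[int]:
    """Map floats to integers in [-127, 127], rounding to nearest (ties to even)."""
    return [max(-127, min(127, round(v / scale))) for v in values]


def dequantize_int8(q: list[int], scale: float) -> list[float]:
    """Map INT8 codes back to approximate float values."""
    return [v * scale for v in q]


weights = [0.82, -1.27, 0.003, 0.5, -0.25]  # hypothetical layer weights
scale = int8_scale(weights)
q = quantize_int8(weights, scale)
restored = dequantize_int8(q, scale)

print("scale     :", scale)
print("int8      :", q)
print("fp16      :", [to_fp16(w) for w in weights])
print("int8 error:", max(abs(a - b) for a, b in zip(weights, restored)))
```

Each INT8 value is off by at most half a quantization step, which is why small values such as `0.003` can collapse to zero and why a good choice of scale matters for accuracy.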

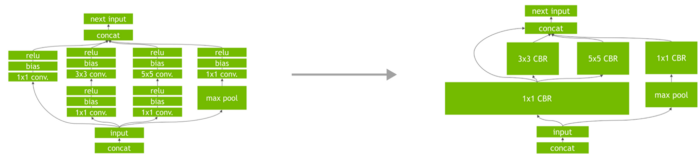

TensorRT eliminates layers whose outputs are never used, avoiding unnecessary computation. It also fuses convolution, bias, and ReLU layers into a single layer wherever possible. It’s important to note that these optimizations don’t change what the network computes; they restructure it so the same operations run more efficiently.
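Both ideas can be illustrated in a few lines of plain Python. This is a toy sketch of the concepts, not TensorRT’s implementation: the graph and its layer names (including the unused `debug_stats` layer) are hypothetical, and the “fused” kernel simply performs the convolution, bias add, and ReLU in one pass instead of three.

```python
# Hypothetical toy graph: layer name -> names of the layers it reads from.
graph = {
    "input": [],
    "conv1": ["input"],
    "relu1": ["conv1"],
    "debug_stats": ["conv1"],  # computed, but nothing downstream uses it
    "output": ["relu1"],
}


def live_layers(graph: dict[str, list[str]], outputs: list[str]) -> set[str]:
    """Dead-layer elimination: keep only layers reachable backwards from the outputs."""
    live, stack = set(), list(outputs)
    while stack:
        name = stack.pop()
        if name not in live:
            live.add(name)
            stack.extend(graph[name])
    return live


def conv1d(x: list[float], w: list[float]) -> list[float]:
    """Valid (unpadded) 1-D cross-correlation, as used in CNN layers."""
    k = len(w)
    return [sum(x[i + j] * w[j] for j in range(k)) for i in range(len(x) - k + 1)]


def unfused(x: list[float], w: list[float], b: float) -> list[float]:
    y = conv1d(x, w)                 # pass 1: convolution, writes a temporary list
    y = [v + b for v in y]           # pass 2: bias add, another temporary list
    return [max(0.0, v) for v in y]  # pass 3: ReLU


def fused(x: list[float], w: list[float], b: float) -> list[float]:
    """Convolution + bias + ReLU in a single pass with no intermediate lists."""
    k = len(w)
    out = []
    for i in range(len(x) - k + 1):
        acc = 0.0
        for j in range(k):
            acc += x[i + j] * w[j]  # convolution (same summation order as conv1d)
        acc += b                    # bias, applied inside the same loop
        out.append(max(0.0, acc))   # ReLU before the result is stored
    return out


x = [1.0, -2.0, 3.0, 0.5, -1.5, 2.0]
w = [0.5, -1.0, 0.25]
b = 0.1
print(sorted(live_layers(graph, ["output"])))
print(unfused(x, w, b))
print(fused(x, w, b))
```

The two versions produce the same outputs; in a real engine, the gain comes from not writing intermediate tensors to memory and reading them back between layers.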

DeepStream

The Jetson line brings more computation power to the edge, and TensorRT makes deep learning models more efficient for faster inference. But one issue remains: we rarely work with a single camera. Most applications have multiple cameras and sensors, so there’s a need for a high-density stream analytics platform.

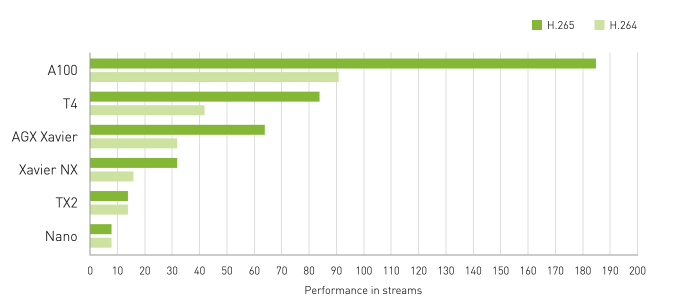

Stream density defines the number of camera feeds or data streams that can be processed simultaneously.

stream density achieved at 1080p/30 FPS (Source: developer.nvidia.com)
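For a rough feel of what stream density means, divide the total frames per second a pipeline can sustain by the frame rate each stream needs. This idealized sketch ignores decode limits, memory, and scheduling overhead, and the 250 FPS pipeline in the example is a hypothetical number, not one taken from the chart.

```python
import math


def stream_density(pipeline_fps: float, per_stream_fps: float = 30.0) -> int:
    """Idealized count of streams a pipeline can serve at per_stream_fps each."""
    return math.floor(pipeline_fps / per_stream_fps)


def per_frame_budget_ms(num_streams: int, per_stream_fps: float = 30.0) -> float:
    """Average processing time allowed per frame when num_streams share one pipeline."""
    return 1000.0 / (num_streams * per_stream_fps)


# Hypothetical pipeline that sustains 250 frames per second in total.
streams = stream_density(250.0)
print(f"{streams} streams at 30 FPS")
print(f"{per_frame_budget_ms(streams):.2f} ms per frame")
```

Read the other way around, every extra 30 FPS stream shrinks the time available per frame, which is why whole-pipeline optimizations matter as much as a fast model.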

That’s where DeepStream comes in: it enables developers to apply AI to streaming video. DeepStream jointly optimizes video decode/encode operations, image scaling, format conversion, and edge-to-cloud connectivity for complete end-to-end performance.

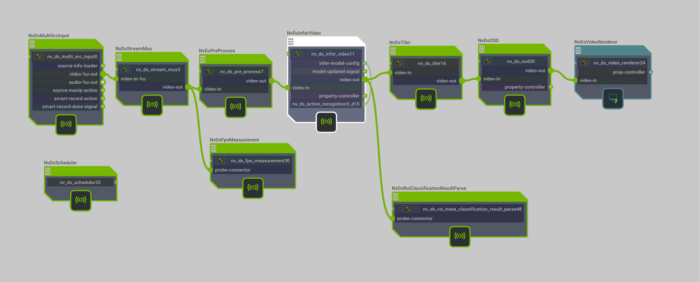

Graph Composer (Source: developer.nvidia.com)

In the 6.0 release, DeepStream introduced Graph Composer, a powerful low-code graphical programming option. Its intuitive interface abstracts away much of the DeepStream, GStreamer, and platform programming knowledge otherwise required to build real-time, multi-stream Vision AI applications.

Want to learn how to leverage this potent combination of Jetson Nano and TensorRT to deploy state-of-the-art computer vision models such as YOLOX? Check out our Jetson Computer Vision Course! We take you through everything, from setting up the Jetson Nano and installing deep learning libraries to training, optimizing, and deploying computer vision models.
